Key findings

- The report draws attention to the pervasive global use of artificial intelligence (AI)-generated disinformation for political purposes. Deepfake technology has become a powerful tool for swaying public opinion, disseminating misleading information, and subverting democratic processes.

- Deepfake technology is often used to undermine opposition parties’ vote banks, damage their reputations, and manipulate public opinion.

- Political parties try to strengthen their position while targeting their opponents by fabricating and spreading false narratives through synthetic media.

- This report provides an overview of deepfake-related incidents during election seasons in France, Taiwan, the UK, Indonesia, and Colombia. These examples show how easily disinformation operations using deepfake technology can manipulate political systems.

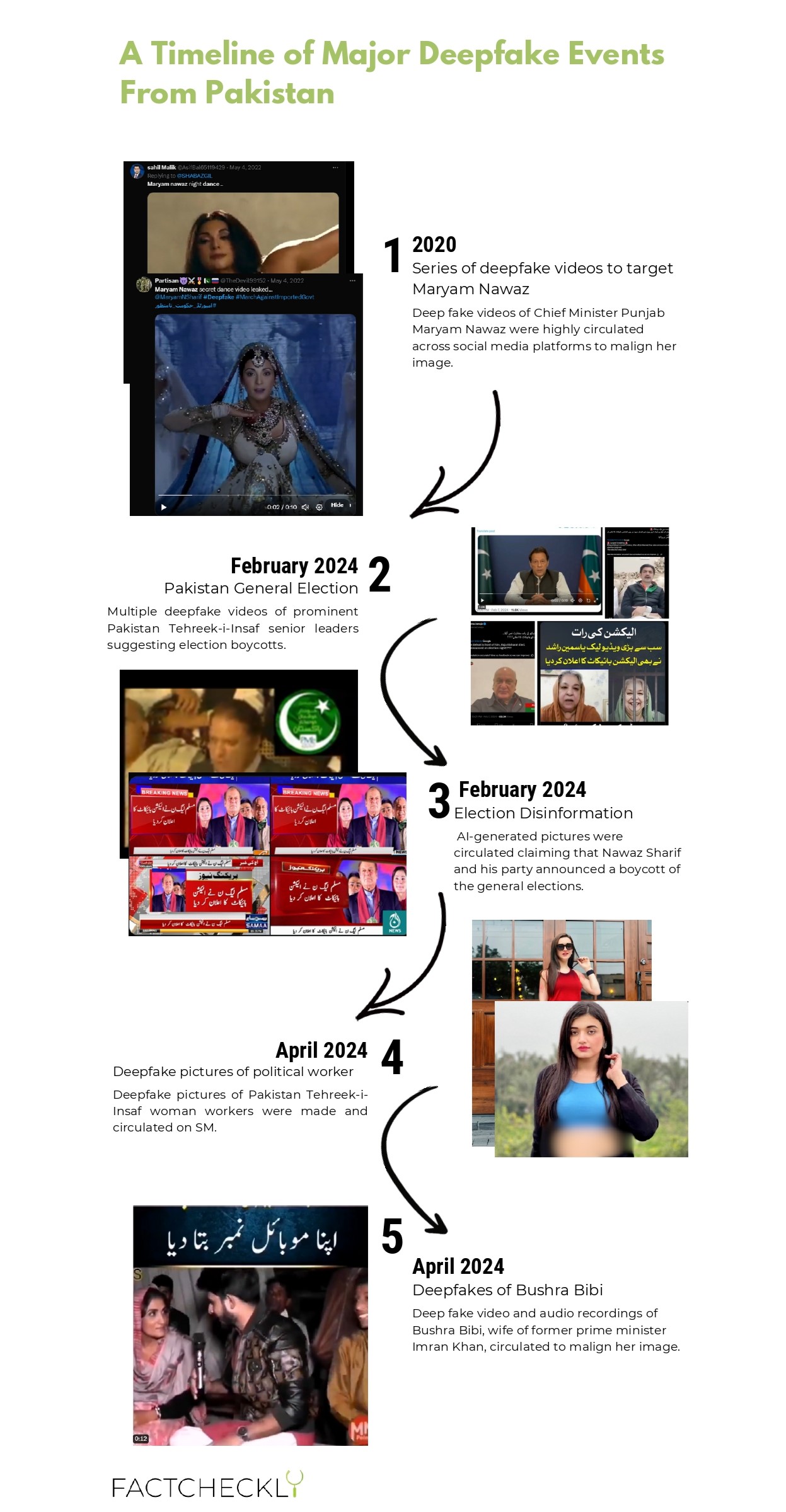

- The report provides a timeline of deepfake incidents, primarily from South Asian countries such as Bangladesh, Pakistan, and India. These incidents demonstrate how deepfake technology is becoming more common in the region and how it affects media integrity, social stability, and political discourse.

- The analysis emphasises the urgent need for laws governing deepfake technology, especially in countries such as Pakistan, where its misuse poses serious risks to media integrity and democratic governance.

- Effective laws and regulations are essential to curb the spread of deepfake disinformation and safeguard the integrity of electoral processes.

- Addressing the issues raised by deepfake technology requires cross-sector collaboration among governments, tech corporations, civil society organizations, and international authorities.

- Cooperative efforts are required to establish comprehensive policies that counteract misinformation, improve media literacy, and strengthen democratic resilience against new threats.

- Research and development in deepfake detection and mitigation must continue. Investing in innovative technologies can enable stakeholders to detect, counter, and lessen the detrimental effects of AI-generated misinformation on political processes and social cohesion.

Background

In an age where artificial intelligence (AI) increasingly shapes our realities, the proliferation of AI-generated disinformation poses a significant threat to societies worldwide. Deepfake technology, a form of synthetic media generated by artificial intelligence, has emerged as a potent tool for shaping political and religious narratives, particularly during election seasons. Because deepfakes can manipulate audio and video content to create hyper-realistic simulations of individuals, political rivals and extremist groups have increasingly deployed them to undermine opponents and sway public opinion. This report examines the intersection of deepfakes and politics, focusing on examples from South Asia, particularly Pakistan, India, and Bangladesh. Through a timeline of significant events and cases, it explores the societal impact and implications of deepfakes and concludes with recommendations for safeguarding human rights in the age of deepfake technology.

Deepfake Usage and Politics

In the context of elections, deepfakes have been weaponized to spread disinformation, manipulate voter perceptions, and sabotage electoral processes. Political adversaries often exploit deepfake technology to fabricate videos or audio recordings of opponents making controversial statements or endorsing false narratives. The greatest risk is that society has reached a point where people can no longer trust what they see or hear.

Using advanced artificial intelligence algorithms, deepfakes can produce extremely realistic audio and video recordings that convincingly portray people saying or doing things they never did. This technology is increasingly used in politics to stoke division in communities, manipulate public opinion, and discredit political rivals. Deepfakes have become a powerful weapon for spreading misinformation and influencing election results. Examples include fabricated speeches, deceptive campaign commercials, and altered footage of political events such as rallies and debates.

During election seasons, incidents of deepfake use for political influence have been documented in a number of countries, underscoring the phenomenon’s global reach and significance. For instance, a candidate for the Europe Écologie Égalité party in France acknowledged using artificial intelligence to enhance her photo on a campaign poster. In Slovakia, a doctored audio recording purported to capture a conversation between a well-known journalist and Michal Šimečka, leader of the Progressive Slovakia party. In Poland, Civic Platform, the main opposition party, came under fire for using an AI-generated voice to read out emails. According to a New York Times report, both major presidential contenders in Argentina made substantial use of AI to alter photographs and produce deepfakes for their campaigns.

Similar concerns were raised during Colombia’s October 2023 regional elections over the potential spread of AI-generated fake audio recordings targeting political candidates. Reports of fake audio and video recordings also surfaced in India during the 2024 general election, attracting attention from social media users and political parties alike. According to a Financial Times report, pro-government media outlets in Bangladesh spread AI-generated disinformation. Taiwan accused China of using artificial intelligence to launch a large-scale disinformation campaign during its elections in order to influence the electoral process.

In Pakistan, former Prime Minister Imran Khan’s party used AI to create campaign messages, including speeches based on notes passed from prison. In the recent Indonesian election, a deepfake video of the late President Suharto was used to encourage people to vote, and deepfake videos of Defence Minister Prabowo Subianto and presidential candidate Anies Baswedan speaking fluent Arabic went viral. In the UK, more than 100 deepfake video advertisements impersonating Prime Minister Rishi Sunak were promoted on Facebook, and an AI-generated audio clip of London Mayor Sadiq Khan also circulated.

The timeline below reflects the interconnected nature of AI-generated disinformation, which spans different regions and affects electoral integrity, social stability, and democracy.

A Tale of Deepfake Content from South Asia

Pakistan

In Pakistan’s 2024 general elections, Pakistan Tehreek-e-Insaf (PTI) used artificial intelligence (AI) to address the numerous challenges it faced during the campaign. For example, the party hosted virtual gatherings and engaged supporters with AI-generated speeches by its imprisoned leader, Imran Khan, ensuring his continued public presence. The party also launched a digital portal to help voters identify its candidates and electoral symbols.

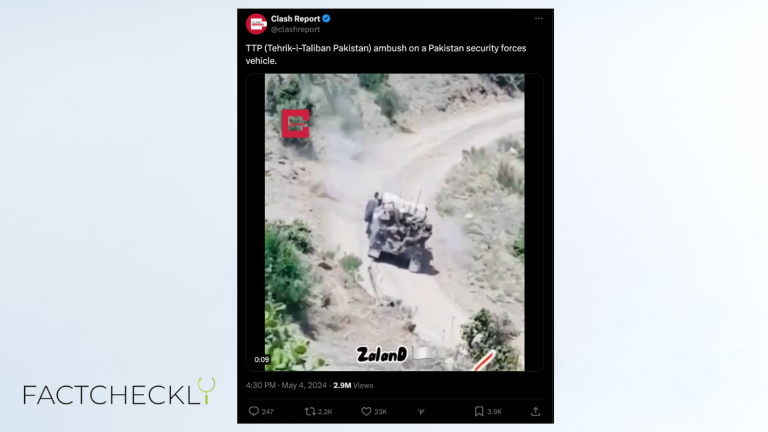

Meanwhile, supporters of PTI’s rival, the Pakistan Muslim League-Nawaz (PML-N), posted deepfake videos of PTI’s senior leadership a day before the general elections to mislead voters and create panic. Deepfake videos and fake audio clips of Imran Khan were generated, and a deepfake video of Sher Afzal Marwat was circulated the day before the elections to create chaos. Similarly, deepfake videos of Yasmeen Rashid and Raja Basharat were circulated announcing a boycott of the 2024 general elections. Some of these clips were so convincing that several media outlets were taken in and relayed them to audiences of millions. PTI workers have accused the PML-N, the party leading the ruling coalition, of using deepfake videos and audio to spread confusion among PTI supporters and influence the election results. For their part, PTI supporters circulated AI-generated images falsely claiming that Nawaz Sharif and his party had announced a boycott of the general elections.

Deepfake pictures and videos have also been used to malign political leaders and workers. Maryam Nawaz, Senior Vice President of the PML-N and now Chief Minister of Punjab, was targeted by deepfake videos in 2022. Most of these videos were taken down, and arrests were made for uploading a deepfake video of the PML-N leader to social media. Likewise, a series of deepfake pictures and videos targeting former Prime Minister Imran Khan’s wife, Bushra Bibi, and a female PTI worker have circulated on social media platforms.

India

India has also witnessed the proliferation of deepfakes during election seasons, including fabricated videos targeting political leaders to tarnish their reputations or incite communal violence. Weeks before India’s 2024 general election, deepfake videos of Bollywood actors Aamir Khan and Ranveer Singh went viral on social media, showing the actors criticizing the Bharatiya Janata Party (BJP) and endorsing the Indian National Congress.

In 2020, a deepfake video of BJP politician Manoj Tiwari went viral. In the original video, Tiwari speaks in English, criticizing his political opponent Arvind Kejriwal and urging voters to support the BJP. A second version was manipulated using deepfake technology so that his mouth moves convincingly as he speaks in Haryanvi, a Hindi dialect spoken by the voters the BJP was targeting.

A deepfake video of the late former Chief Minister of Tamil Nadu, M. Karunanidhi, was projected on a large screen before a live audience, showing him congratulating his friend and fellow politician T. R. Baalu. In 2023, Instagram reels showing Prime Minister Narendra Modi singing in regional languages went viral. Another prominent example of AI use for political gain was a video showing K. T. Rama Rao, a leader of Telangana’s then-ruling Bharat Rashtra Samithi (BRS), calling on voters to support the Congress party.

Bangladesh

The use of artificial intelligence in political campaigns is still experimental in Bangladesh, but it grew rapidly during this election. Bangladesh’s ruling party also bore out worldwide concerns by harnessing new AI tools to crack down on the opposition. On the day of the 12th national parliamentary election, a fake video circulated on social media in which Abdullah Nahid Nigar, an independent candidate, appeared to announce that she had withdrawn from the election.

In 2023, deepfake videos of Nipun Roy Chowdhury, a member of the Bangladesh Nationalist Party (BNP) Central Executive Committee, and Rashed Iqbal Khan, acting president of Bangladesh Jatiotabadi Chatra Dal, were also circulated. A deepfake video of an opposition lawmaker in Bangladesh wearing a bikini went viral, sparking nationwide outrage.

Impact Analysis

The incidents outlined in this report underscore the pervasive nature of AI-generated disinformation and its detrimental effects on democratic processes and social cohesion. Deepfakes have emerged as a powerful tool for spreading false narratives, manipulating public opinion, and inciting violence.

The proliferation of deepfakes poses significant societal challenges, including erosion of trust in political institutions, polarization of communities, and amplification of disinformation. Deepfakes not only undermine democratic processes but also exacerbate social tensions, fueling violence and extremism in regions affected by ethnic, religious, or political divides. Furthermore, the rapid advancement of deepfake technology threatens individual privacy, integrity, and agency, raising fundamental questions about the reliability of digital media and the authenticity of human communication.

Deepfakes and The Laws

The rapid advancement of AI deepfake technology has outpaced regulatory and detection efforts, posing challenges for policymakers, tech companies, and law enforcement agencies. Deepfake content has infringed upon individuals’ rights to privacy, reputation, and freedom of expression, particularly targeting marginalized groups and public figures. Similarly, it has raised concerns about national security, foreign interference, and the potential for cyber-enabled threats to geopolitical stability.

Countries around the world are enacting legislation to govern the use of AI. In April 2021, the European Commission proposed the first EU regulatory framework for AI. Under this framework, AI systems used in different applications are assessed and classified according to the risk they pose to users, with the degree of regulation scaled to the level of risk. In the United States, President Biden signed the Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence on October 30, 2023.

In South Asia, Pakistan’s Ministry of Information Technology and Telecommunication has drafted a National Artificial Intelligence (AI) Policy that focuses on the equitable distribution of opportunities and the responsible use of AI. India currently lacks specific laws directly addressing generative AI, deepfakes, and AI-related crimes, although the government has said that relevant legislation is in progress.

The Way Forward

Addressing the global impacts of AI deepfakes requires a comprehensive and collaborative approach. Governments must enact legislation to regulate the creation, dissemination, and use of deepfake content, imposing penalties for malicious manipulation and exploitation. Tech companies should invest in AI detection tools and algorithms to identify and mitigate the spread of deepfake disinformation on digital platforms.

Education and media literacy initiatives are essential to empower individuals to recognize and critically evaluate deepfake content, fostering informed digital citizenship and responsible online behavior. Multilateral efforts are needed to coordinate responses to the global threat of AI deepfakes, including information sharing, capacity building, and diplomatic engagements.

In conclusion, the rise of deepfake technology presents unprecedented challenges to the integrity of political processes and religious discourse. Addressing these challenges requires concerted efforts from governments, civil society, and technology stakeholders to mitigate the harmful effects of deepfakes and uphold the principles of democracy, truth, and human dignity.
